When using PyTorch for deep learning tasks, it’s important to check if your code is utilizing the powerful computation of GPUs. This article discusses various methods in PyTorch to verify GPU availability and view hardware specifications. It also addresses common issues and provides solutions to ensure your models train using GPU acceleration.

From importing libraries to viewing device information, this guide covers different ways to check for an active CUDA-enabled GPU in PyTorch. It also demonstrates how to list multiple GPU details and set the default device. By following these steps, you can confirm PyTorch leverages your GPU’s parallel processing capabilities.

Whether working with a single GPU or multiple ones, verifying availability is essential for deep learning in PyTorch. This article explores diverse techniques ranging from basic import checks to more advanced functions. Clear code samples, explanations, and solutions help overcome common errors and optimize model training.

How do I check if PyTorch is using the GPU?

To check whether PyTorch is using the GPU, print the current CUDA device or inspect the device of your tensors. PyTorch detects available GPUs automatically, but it does not place tensors on them by default: new tensors are created on the CPU unless you request a GPU. Undervolting the GPU, a separate tuning step, can reduce power usage and heat generation during training without significantly impacting performance.

To print the current device, call torch.cuda.current_device():

import torch

print(torch.cuda.current_device())

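This returns the integer index of the current CUDA device (usually 0). It does not return -1 for the CPU: if PyTorch was built without CUDA or no GPU is usable, the call raises an error, so check torch.cuda.is_available() first. To see where a specific tensor lives, read its .device attribute.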
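Because current_device() raises an error rather than returning a sentinel on machines without a usable GPU, a minimal sketch can guard it with is_available() and fall back to reporting the CPU. The helper name describe_current_device is hypothetical, chosen only for illustration.

```python
import torch

def describe_current_device() -> str:
    """Hypothetical helper: report the current CUDA device, or 'cpu' if none is usable."""
    # torch.cuda.current_device() raises when CUDA is unusable, so check first.
    if not torch.cuda.is_available():
        return "cpu"
    index = torch.cuda.current_device()
    return f"cuda:{index} ({torch.cuda.get_device_name(index)})"

print(describe_current_device())

# A tensor's .device attribute shows where that particular tensor lives.
x = torch.ones(3)
print(x.device)  # new tensors are on the CPU unless a device is requested
```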

PyTorch Check GPU?

There are a few main ways to check GPU availability in PyTorch:

- Check that PyTorch was built with CUDA support by printing torch.version.cuda (it is None for CPU-only builds):

import torch

print(torch.version.cuda)

- Check if any GPUs were detected by printing torch.cuda.is_available():

print(torch.cuda.is_available())

- Get the name and properties of the primary GPU by printing torch.cuda.get_device_name(0) and torch.cuda.get_device_properties(0).

- Try moving a tensor to the GPU and check if it works without errors.

These validity checks help confirm your code can leverage GPU hardware acceleration with PyTorch.
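The individual checks in the list can be gathered into one report. The sketch below assumes a hypothetical helper named cuda_summary; it only calls functions that are safe on CPU-only machines and queries device names only when a GPU is usable.

```python
import torch

def cuda_summary() -> dict:
    """Hypothetical helper: collect basic GPU checks into one dictionary."""
    summary = {
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),  # 0 when no GPU is visible
        "devices": [],
    }
    # Device names can only be queried when CUDA is actually usable.
    if summary["cuda_available"]:
        for i in range(summary["device_count"]):
            summary["devices"].append(torch.cuda.get_device_name(i))
    return summary

for key, value in cuda_summary().items():
    print(f"{key}: {value}")
```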

Requirements:

- PyTorch installed with CUDA support

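- An NVIDIA GPU with a recent NVIDIA driver (the official PyTorch binaries bundle their own CUDA runtime)

- CUDA-capable GPU(s) whose compute capability is supported by your PyTorch build, with enough VRAM for your models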

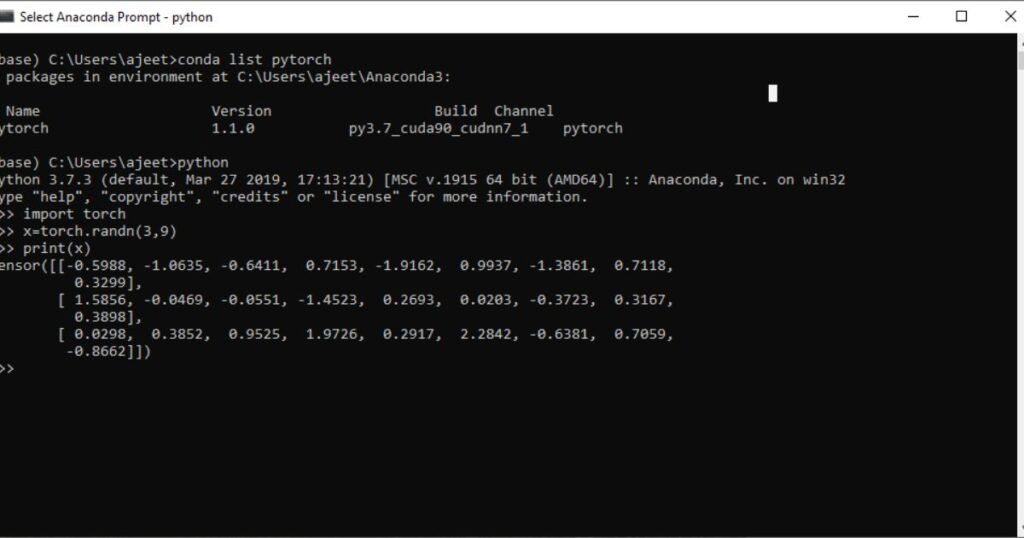

PyTorch Installed:

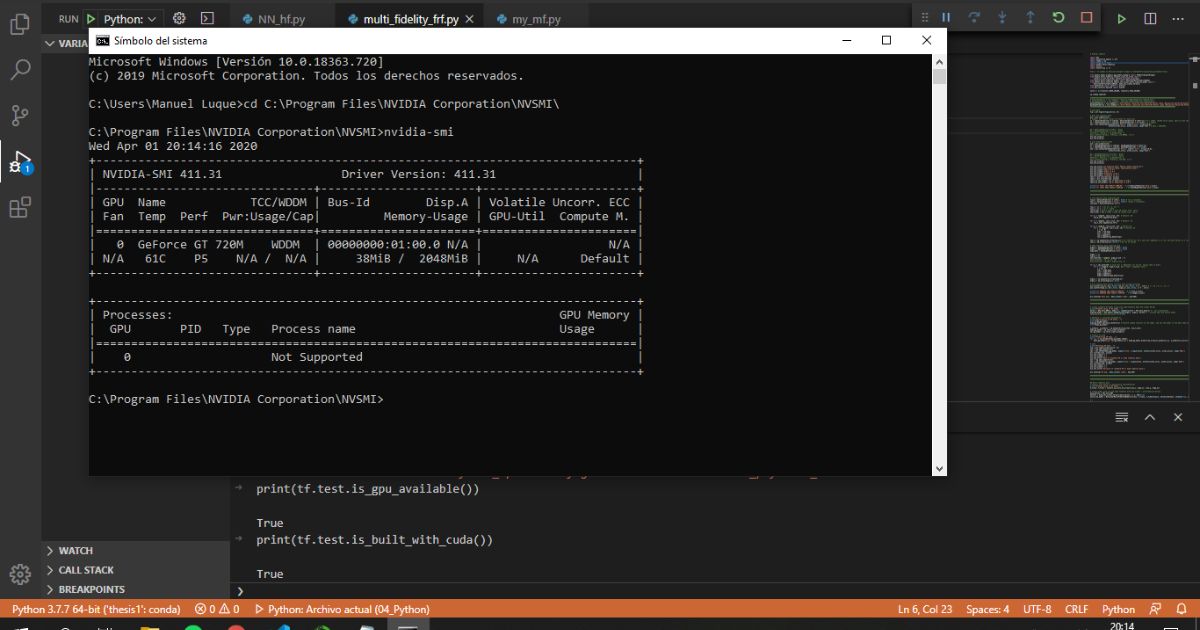

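Verify that you installed a CUDA build of PyTorch rather than a CPU-only one. Use the install command generated by the selector on pytorch.org for your platform and CUDA version. On Windows, the default pip wheel is CPU-only, so a CUDA build must be requested explicitly. macOS builds do not support CUDA.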

CUDA Drivers:

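Install the latest graphics driver from NVIDIA for your GPU model. The official PyTorch binaries ship with their own CUDA runtime, so what matters is that the driver is new enough for the CUDA version of your PyTorch build; a separate CUDA Toolkit is only needed to build PyTorch or CUDA extensions from source. Reboot after installing drivers.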

GPU Information:

Check your GPU name, VRAM size, and compute capability, and confirm the device is visible to PyTorch using the methods covered here. Older GPUs may be unsupported if their compute capability is not included in your PyTorch build.
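To test the compute-capability point programmatically, one approach is to compare the GPU's sm_XY architecture with the list the build was compiled for. This is a simplified sketch: the helper name arch_supported is hypothetical, and builds that include PTX ("compute_XY") entries may still run on newer GPUs, so treat a False result as a hint rather than a verdict.

```python
import torch

def arch_supported(index: int = 0):
    """Hypothetical helper: True/False if the GPU's sm_XY is compiled in, None if no GPU."""
    if not torch.cuda.is_available():
        return None
    major, minor = torch.cuda.get_device_capability(index)
    arch = f"sm_{major}{minor}"
    compiled = torch.cuda.get_arch_list()  # e.g. entries such as "sm_80"
    # Simplification: PTX entries can allow JIT compilation for newer GPUs.
    return arch in compiled

print(arch_supported())
```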

Checking GPU Availability in PyTorch?

There are a few main approaches to programmatically check if a GPU is available in PyTorch:

- Import PyTorch and check if CUDA is enabled:

import torch

print(torch.cuda.is_available())

- Check which device a tensor lives on (new tensors are created on the CPU by default):

x = torch.randn(5, 3)

print(x.device)

- Get properties of the primary GPU if available:

print(torch.cuda.get_device_name(0))

print(torch.cuda.get_device_properties(0))

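- Try moving a tensor to the GPU and catch any errors (the exact exception type depends on whether CUDA is missing from the build or just unusable):

try:
    x = x.to('cuda')
except (AssertionError, RuntimeError) as err:
    print('Could not move tensor to GPU:', err)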

These methods allow Python code to verify PyTorch GPU integration and take appropriate actions.

Import PyTorch:

Import PyTorch and check whether the build includes CUDA:

import torch

print(torch.version.cuda)

For a CUDA build this prints the CUDA version the binary was compiled against; for a CPU-only build it prints None.

Check for GPU:

Check if a CUDA-capable GPU is available:

print(torch.cuda.is_available())

True means a GPU was found, False indicates only CPU usage is possible.

GPU Information:

Get details of the primary GPU, such as its name, memory, and compute capability:

print(torch.cuda.get_device_name(0))

print(torch.cuda.get_device_properties(0))

PyTorch Check If CUDA Is Available?

One of the simplest ways to check whether your PyTorch build supports CUDA is to inspect torch.version.cuda after importing the package:

import torch

print(torch.version.cuda)

If CUDA support was compiled in, this returns the CUDA version string. Otherwise it returns None, indicating a CPU-only build.

You can also check if PyTorch detected a CUDA-capable GPU by using the torch.cuda.is_available() method:

print(torch.cuda.is_available())

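This goes beyond the build check: it confirms at runtime that a working driver and at least one CUDA-capable GPU are present. The two checks complement each other, because a CUDA build on a machine with a missing or outdated driver reports a CUDA version while is_available() returns False.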
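A short sketch that prints the build-time and run-time answers side by side makes the difference visible. torch.backends.cuda.is_built() reports whether the binary was compiled with CUDA, independent of whether a GPU is present.

```python
import torch

# Build time: was this binary compiled with CUDA? (None means a CPU-only build)
print("CUDA version of build:", torch.version.cuda)
print("Built with CUDA:", torch.backends.cuda.is_built())

# Run time: can a driver and at least one GPU actually be used right now?
print("CUDA usable now:", torch.cuda.is_available())
```

If the first two lines show CUDA but the last shows False, the problem is usually the driver or GPU visibility rather than the PyTorch installation.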

How do I know if my GPU is available in PyTorch?

There are a few different ways to check if your GPU is available for use in PyTorch:

- Check torch.cuda.is_available():

import torch

print(torch.cuda.is_available())

True means a GPU was detected, False means only CPU is available.

- Print the current device:

print(torch.cuda.current_device())

It returns the index of the current CUDA device; it raises an error, rather than returning -1, when no GPU is usable.

- Get the name of device 0:

print(torch.cuda.get_device_name(0))

- Try moving a tensor to the GPU:

x = torch.ones(3).to('cuda')

This raises an error if no usable GPU is found.

These methods let you validate PyTorch is utilizing your Nvidia GPU rather than just the CPU.

Find out if a GPU is available?

There are a few main approaches to programmatically check for an available GPU in PyTorch:

The first option is to check the torch.cuda.is_available() function:

import torch

if torch.cuda.is_available():
    print('CUDA is available!')

The function returns True if a CUDA-capable GPU is detected.

You can also check the device of a tensor after moving it to the GPU:

x = torch.tensor([1, 2, 3]).to('cuda')

print(x.device)

It prints cuda:0 if the move succeeds.

Finally, get properties of the primary GPU if one exists:

print(torch.cuda.get_device_name(0))

Any of these methods allow your code to programmatically verify a GPU can be utilized with PyTorch.

Find out the specifications of the GPU(s)?

To get information on the available GPU(s) in PyTorch, you can use the torch.cuda.device_count() and torch.cuda.get_device_properties() functions.

For example, a machine with two GPUs might report:

| Device ID | Name | Memory (GB) | Compute Capability |
|---|---|---|---|
| 0 | Tesla K80 | 12.59 | 3.7 |
| 1 | GeForce GTX 1080 | 8 | 6.1 |

The following loop prints these details for every detected GPU:

import torch

num_gpus = torch.cuda.device_count()

for i in range(num_gpus):
    props = torch.cuda.get_device_properties(i)
    print("Device ID:", i)
    print("Name:", props.name)
    print(f"Memory: {props.total_memory / 1024**3:.2f} GB")
    print(f"Compute Capability: {props.major}.{props.minor}")

This allows you to get details of each available GPU for task assignment or hardware verification.

Why is PyTorch not detecting my GPU?

Some potential reasons PyTorch may fail to detect your GPU include:

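- PyTorch was installed without CUDA support. Check that torch.version.cuda is not None; if it is, reinstall a CUDA build.

- Outdated graphics drivers. Update to the latest version from Nvidia for your GPU.

- Incompatible versions. Your NVIDIA driver must support the CUDA version your PyTorch build was compiled for; if you built PyTorch from source, the CUDA Toolkit and cuDNN versions must match it as well.

- Unsupported GPU. Older GPUs whose compute capability is not included in your PyTorch build may be detected but unusable. Low VRAM, by contrast, does not prevent detection; it causes out-of-memory errors during training.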

- GPU hardware issues. Try different PCIe slots or test the GPU on another system.

- Mixed GPU types. Using multiple Nvidia models may cause issues.

- Virtual machine settings. Passthrough must be configured for VM GPU access.
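When working through the causes above, it helps to print the relevant facts in one place. The sketch below uses a hypothetical helper named diagnose; torch.backends.cudnn.version() returns None when cuDNN is unavailable, and CUDA_VISIBLE_DEVICES shows whether GPUs are being hidden by the environment.

```python
import os
import platform

import torch

def diagnose() -> dict:
    """Hypothetical helper: gather facts useful for GPU-detection troubleshooting."""
    return {
        "python": platform.python_version(),
        "torch": torch.__version__,
        "torch.version.cuda": torch.version.cuda,  # None -> CPU-only build
        "cudnn": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
        "CUDA_VISIBLE_DEVICES": os.environ.get("CUDA_VISIBLE_DEVICES"),
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }

for key, value in diagnose().items():
    print(f"{key}: {value}")
```

For a fuller report, PyTorch also ships an environment collector that can be run with python -m torch.utils.collect_env.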

Do I need to install CUDA for PyTorch?

Usually not. The official PyTorch CUDA builds for pip and conda bundle the CUDA runtime and cuDNN libraries they were compiled with, so a separate CUDA installation is only required when you build PyTorch from source or compile custom CUDA extensions. What you do need is a CUDA build of PyTorch (a CPU-only build provides CPU functionality only) and a working NVIDIA driver.

To leverage your NVIDIA GPU with PyTorch:

- Install the latest NVIDIA driver for your GPU model.

- Install a CUDA build of PyTorch whose CUDA version your driver supports.

- If you build from source, install a CUDA Toolkit and cuDNN that match your PyTorch version before building.

Without a CUDA build and a working driver, PyTorch does not initialize any GPU functionality and remains CPU-only, so check both before expecting GPU acceleration.

How do I list all currently available GPUs with pytorch?

To list all GPUs available to PyTorch, you can use the device_count() and get_device_properties() methods:

import torch

num_gpus = torch.cuda.device_count()
print("Number of available GPUs:", num_gpus)

for i in range(num_gpus):
    props = torch.cuda.get_device_properties(i)
    print("Device ID:", i)
    print("Name:", props.name)
    print("Streaming multiprocessors:", props.multi_processor_count)
    print(f"Total memory (GiB): {props.total_memory / 1024**3:.2f}")
    # Printing the object shows every field your PyTorch version exposes
    print(props)

This prints the specifications of each detected GPU, such as its name, multiprocessor count, and total memory. You can then verify that the detected hardware matches your system's actual GPU configuration.
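Device properties report total memory only. To see how much memory is currently free on each GPU, a minimal sketch can call torch.cuda.mem_get_info(), which returns a (free, total) pair in bytes for a device. The helper name memory_report is hypothetical.

```python
import torch

def memory_report() -> list:
    """Hypothetical helper: free and total memory (GiB) for every visible GPU."""
    rows = []
    for i in range(torch.cuda.device_count()):  # empty loop on CPU-only machines
        free, total = torch.cuda.mem_get_info(i)
        rows.append({
            "index": i,
            "name": torch.cuda.get_device_name(i),
            "free_gib": free / 1024**3,
            "total_gib": total / 1024**3,
        })
    return rows

for row in memory_report():
    print(f"{row['index']}: {row['name']} "
          f"{row['free_gib']:.2f} of {row['total_gib']:.2f} GiB free")
```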

How to make code run on GPU on Windows 10?

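To run PyTorch code on a GPU in Windows 10:

1. Install the latest NVIDIA driver for your GPU.

2. Install a CUDA build of PyTorch (pip or conda) using the command from the selector on pytorch.org. The default pip wheel on Windows is CPU-only, and the CUDA builds bundle their own CUDA runtime and cuDNN.

3. Install the CUDA Toolkit and cuDNN, and set CUDA_HOME and CUDA_PATH, only if you need to compile CUDA code yourself (for example, custom extensions).

4. Optionally set environment variables that control which GPUs PyTorch sees:
   - CUDA_DEVICE_ORDER
   - CUDA_VISIBLE_DEVICES

5. Import torch and check torch.cuda.is_available().

6. Move your model and tensors to the GPU with .to('cuda'); PyTorch does not do this automatically.

7. Run your code.

8. Check tensor.device to confirm where your tensors live.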

Following these steps ensures PyTorch leverages your Nvidia graphics card’s computational power on Windows.
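The CUDA_DEVICE_ORDER and CUDA_VISIBLE_DEVICES variables can also be set from Python, which works the same way on Windows and Linux. This sketch assumes you want only the first GPU (by PCI bus order) to be visible; the variables must be set before CUDA is initialized, so the safest place is before importing torch.

```python
import os

# Must happen before CUDA is initialized; simplest is before importing torch.
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"  # number GPUs by PCI bus order
os.environ["CUDA_VISIBLE_DEVICES"] = "0"        # expose only the first GPU

import torch  # imported after the environment is configured on purpose

print("Visible GPUs:", torch.cuda.device_count())
```

With this setting, the single visible GPU appears to PyTorch as cuda:0 even if it is not the first card in the system's default ordering.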

How to run PyTorch on GPU by default?

By default, PyTorch creates tensors and modules on the CPU. To run on the GPU, choose a device once and place everything on it.

- At the start of your code, add:

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

This checks if CUDA is available and sets the device variable accordingly.

- Then create tensors like:

x = torch.rand(5, 3).to(device)

Use the same device for every tensor and module you create (for example model.to(device)); PyTorch does not move anything automatically.

- You can also choose which GPU the plain 'cuda' device refers to by setting the current device:

torch.cuda.set_device(0)

This selects GPU 0 as the current CUDA device. It does not move existing tensors or models, so you still need .to(device) for each one.
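If you want factory calls such as torch.rand to allocate on the GPU without passing a device every time, PyTorch 2.0 and later provide torch.set_default_device(). A minimal sketch, assuming PyTorch 2.0+, that falls back to the CPU when no GPU is usable:

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Requires PyTorch 2.0+: factory calls without an explicit device argument
# (torch.rand, torch.zeros, nn.Linear's parameters, ...) now allocate on `device`.
torch.set_default_device(device)

x = torch.rand(5, 3)
layer = torch.nn.Linear(3, 2)
print(x.device, next(layer.parameters()).device)
```

This changes only where new tensors are created; tensors that already exist are not moved, so data loaded earlier still needs .to(device).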

Is a GPU available?

To check for an available GPU in PyTorch, you have a few main options:

- Check torch.cuda.is_available():

if torch.cuda.is_available():
    print('GPU is available')

- Get the device properties of GPU 0:

props = torch.cuda.get_device_properties(0)

- Try placing a tensor on the GPU:

tensor = tensor.to('cuda')

- Print the current device:

print(torch.cuda.current_device())

The first check is safe everywhere; the other three raise an error when no usable GPU exists, so run them after is_available() or inside try/except. Together they let your code detect a usable GPU and take appropriate action, such as training on the CPU if none is present.

Use GPU in Your PyTorch Code?

To utilize a GPU in your PyTorch code, there are a few key steps:

- Import PyTorch and check torch.cuda.is_available()

- Create tensors and place them on the GPU explicitly:

x = torch.rand(5, 3).to('cuda')

- Move existing CPU tensors:

x = x.to('cuda')

- Move models to the GPU with model.to('cuda'); their parameters stay on the CPU until you do. Most loss functions have no parameters and run on whatever device their inputs are on.

- Transfer input data to the GPU:

inputs = inputs.to('cuda')

- Run the forward pass, loss calculation, and optimizer steps

- Check tensors were on GPU:

print(x.device)

Following these practices ensures PyTorch efficiently leverages GPU parallelism for superior deep learning performance.
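Putting these steps together, a single training step might look like the sketch below. The tiny linear model and the random batch are placeholders for illustration; the optimizer is created after the model is moved so that it references the on-device parameters.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(10, 1).to(device)  # move the parameters once
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)  # created after .to()

# Placeholder batch; in practice this comes from your DataLoader.
inputs = torch.randn(32, 10).to(device)
targets = torch.randn(32, 1).to(device)

optimizer.zero_grad()
loss = loss_fn(model(inputs), targets)
loss.backward()
optimizer.step()

print("loss on", loss.device, "=", loss.item())
```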

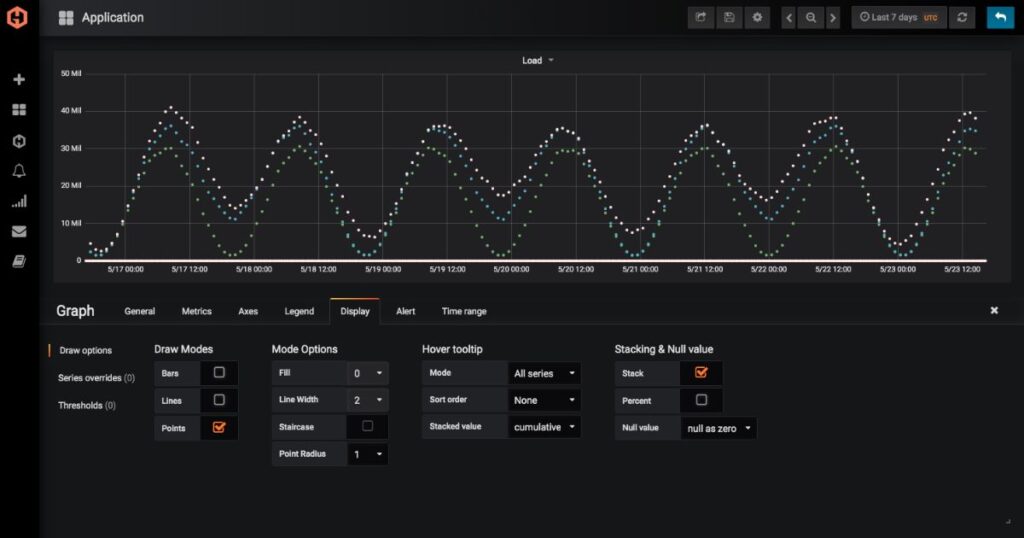

Monitoring Your Metrics

| Metric | Command |
|---|---|
| GPU Temperature | nvidia-smi --query-gpu=temperature.gpu --format=csv,noheader |
| GPU Utilization | nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader |
| GPU Memory Usage | nvidia-smi --query-gpu=memory.used --format=csv,noheader |
| GPU Fan Speed | nvidia-smi --query-gpu=fan.speed --format=csv,noheader |

Running these periodic queries helps track metrics and catch any overheating or throttling issues during PyTorch training.
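The four queries can be combined into one nvidia-smi call and parsed from Python, for example to log metrics alongside training. This sketch assumes nvidia-smi is on the PATH; the helper names parse_smi_csv and query_gpus are hypothetical, and values such as fan speed may read [N/A] on GPUs without a fan sensor.

```python
import shutil
import subprocess

FIELDS = ["temperature.gpu", "utilization.gpu", "memory.used", "fan.speed"]

def parse_smi_csv(text: str) -> list:
    """Turn 'csv,noheader,nounits' output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        rows.append(dict(zip(FIELDS, values)))
    return rows

def query_gpus() -> list:
    if shutil.which("nvidia-smi") is None:
        return []  # NVIDIA driver tools are not installed or not on PATH
    try:
        out = subprocess.run(
            ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
    except subprocess.CalledProcessError:
        return []  # e.g. driver present but no GPU reachable
    return parse_smi_csv(out)

print(query_gpus())
```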

FAQs

1. How to get available devices and set a specific device in Pytorch-DML?

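DirectML support for PyTorch comes from the separate torch-directml package rather than a torch.dml module. In that package, torch_directml.device_count() reports the number of DirectML devices, and torch_directml.device(index) returns a device you can pass to .to().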

2. Installing Pytorch with ROCm but checking if CUDA is enabled. How can I know if I am running on the AMD GPU?

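ROCm builds of PyTorch reuse the torch.cuda API, so torch.cuda.is_available() returns True when a supported AMD GPU is usable. To confirm you are running on ROCm rather than CUDA, check torch.version.hip, which holds the HIP version on ROCm builds and is None otherwise.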
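A minimal sketch that distinguishes the three cases, using a hypothetical helper named gpu_backend:

```python
import torch

def gpu_backend() -> str:
    """Hypothetical helper: return 'rocm', 'cuda', or 'cpu'."""
    # ROCm builds expose AMD GPUs through torch.cuda, so check availability first,
    # then use torch.version.hip (None on non-ROCm builds) to tell them apart.
    if not torch.cuda.is_available():
        return "cpu"
    if torch.version.hip is not None:
        return "rocm"
    return "cuda"

print(gpu_backend())
```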

3. How to check if your pytorch / keras is using the GPU?

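Use torch.cuda.is_available() for PyTorch or tf.config.list_physical_devices('GPU') for TensorFlow/Keras to determine whether a GPU is visible to the framework. Visible is not the same as used: in PyTorch you still need to move models and tensors to the GPU, while TensorFlow places operations on a visible GPU automatically.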

4. Can I use PyTorch on a machine without a GPU?

Yes. PyTorch creates tensors on the CPU by default, so code that never requests a GPU runs normally on machines without one.

5. What if I have multiple GPUs? How do I select a specific one?

To choose a specific GPU by index, use torch.cuda.set_device(), or pass an explicit device such as torch.device('cuda:1') when creating or moving tensors.
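Requesting an index that does not exist raises an error, so a sketch that validates the index first may be safer in scripts that run on different machines. The helper name select_gpu is hypothetical.

```python
import torch

def select_gpu(index: int) -> torch.device:
    """Hypothetical helper: return cuda:<index> if that GPU exists, else the CPU."""
    if torch.cuda.is_available() and 0 <= index < torch.cuda.device_count():
        torch.cuda.set_device(index)  # plain "cuda" now refers to this GPU
        return torch.device(f"cuda:{index}")
    return torch.device("cpu")

device = select_gpu(1)  # falls back to the CPU on machines with fewer than 2 GPUs
x = torch.zeros(2, device=device)
print(x.device)
```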

6. How To Check If PyTorch Is Using The GPU?

torch.cuda.is_available() tells you whether PyTorch can use a GPU; if it returns True, a GPU is available. To confirm that your code actually uses it, check the .device attribute of your model's parameters and your tensors.

7. Check if CUDA is Available in PyTorch?

Use the command “torch.cuda.is_available()” to see if CUDA is available in PyTorch. If the outcome is True, GPU support for CUDA is available.

8. How to check your pytorch / keras is using the GPU?

Use torch.cuda.is_available() for PyTorch or tf.config.list_physical_devices('GPU') for Keras to determine whether either framework can see a GPU.

9. Not using GPU although it is specified?

If a GPU is available but not being used, the most common cause is that the model or its inputs were never moved to it; both must be on the same device. Incorrect driver installations, incompatible CUDA versions, and mismatched library dependencies are other common culprits.
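A quick way to spot the "forgot to move it" case is to collect the device types used by a model's parameters and its inputs. The sketch below uses a hypothetical helper named report_devices and deliberately leaves the batch on the CPU to show the mismatch.

```python
import torch
from torch import nn

def report_devices(model: nn.Module, *tensors: torch.Tensor) -> set:
    """Hypothetical helper: device types used by a model's parameters and given tensors."""
    devices = {p.device.type for p in model.parameters()}
    devices.update(t.device.type for t in tensors)
    return devices

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)
batch = torch.randn(8, 4)  # missing .to(device): a common reason the GPU sits idle

used = report_devices(model, batch)
if len(used) > 1:
    print("Mixed devices:", used, "- move inputs with batch.to(device)")
```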

Conclusion

PyTorch provides several methods to validate GPU availability for deep learning tasks. Checking device information, verifying CUDA support on import, and reviewing hardware specifications confirm your code has proper access. Being able to programmatically detect GPUs avoids training issues from insufficient setup.

Common errors like missing drivers, outdated dependencies, or incompatible hardware can block PyTorch’s GPU usage. Resolving such problems through updated installations ensures models leverage parallel processing power. With the techniques shown, developers can quickly determine if PyTorch will utilize available accelerators.

Efficiently training on GPUs allows exploring larger models and datasets and speeds up experimentation. Verifying PyTorch’s GPU integration through different checks optimizes deep learning workflows to take full advantage of hardware resources. This leads to faster results and insight from powerful parallel computation resources.