GPU debug layers are like special goggles for developers: they sit between an application and the graphics driver and show what the application is really asking the GPU to do. They check graphics API calls, flag invalid or suspicious usage, and report it, making it easier to track down the cause of glitches in games or applications. In simple terms, GPU debug layers are diagnostic sidekicks. They do not fix anything themselves, but they show developers where to look.

Ever wondered: What happens if I turn on GPU debug layers? Your application keeps running, but its graphics calls get checked along the way. Mistakes that would otherwise pass silently, or show up later as a flicker or a crash, are reported as warnings and errors. The trade-off is speed: the extra checking costs some performance, so this is a tool for development and troubleshooting rather than for everyday play.

Enabling GPU debug layers is like giving developers special glasses for spotting issues in games or applications. It’s a behind-the-scenes move that reveals problems that might otherwise go unnoticed. The layers don’t enhance performance on their own; the payoff comes once the reported problems are fixed, which leads to a smoother and more enjoyable experience with fewer hiccups.

The Importance of GPU Debug Layers

GPU debug layers play a crucial role in identifying and resolving issues within graphics applications. These layers act as a diagnostic tool, providing developers with insights into the rendering process and helping to pinpoint potential errors or inefficiencies. The importance of GPU debug layers lies in their ability to streamline the debugging process, allowing developers to create more stable and efficient graphics applications.

Developers rely on these layers to detect issues such as resource leaks, incorrect rendering commands, or inefficient memory usage, many of which would otherwise go unreported until they surface as a visible bug. By addressing these concerns early in the development process, developers improve the overall quality and performance of their graphics applications.

Benefits of Enabling GPU Debug Layers

Enabling GPU debug layers brings several benefits for developers. The layers report problems while the application runs, so developers can identify and fix issues promptly. From detecting memory leaks to uncovering problematic rendering commands, GPU debug layers offer a detailed view of application behavior, resulting in more robust and reliable graphics software.

The benefits extend beyond the debugging phase. Problems caught with the layers during development are fixed before release, which translates into smoother frame rates and better responsiveness for users. The layers themselves are normally switched off in release builds, so users get the fixes without paying the cost of the extra checks.

Table: Key Benefits of GPU Debug Layers

| Benefit | Description |
| --- | --- |
| Real-time Issue Identification | Detects and highlights issues in graphics applications as they occur, facilitating prompt fixes. |
| Enhanced Application Performance | Allows developers to optimize graphics applications for improved frame rates and responsiveness. |
| Comprehensive Debugging Insights | Provides a detailed view of application behavior, aiding in the identification of potential problems. |

Potential Drawbacks and Considerations

While GPU debug layers offer substantial benefits, developers should be aware of potential drawbacks and considerations. Enabling these layers introduces a performance overhead, because every checked call costs extra CPU time. Frame rates measured with the layers on are therefore not representative of what users will see in a release build.

Considerations also include compatibility with different GPUs and drivers. Developers must test and validate the functionality of GPU debug layers across a range of hardware configurations to ensure a consistent debugging experience. Despite these considerations, the advantages of having a robust debugging tool often outweigh the potential drawbacks, making GPU debug layers an invaluable asset in the development toolkit.
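To put a number on that overhead, one simple approach is to record frame times with the layers off and on, then compare the averages. The snippet below is only a sketch of that arithmetic: the function name `overhead_percent` is made up for this example, and the frame times in the usage note are illustrative values, not measurements.

```python
from statistics import mean


def overhead_percent(baseline_ms, debug_ms):
    """Return how much slower the average frame is with debug layers on.

    baseline_ms: frame times (milliseconds) recorded with layers disabled.
    debug_ms:    frame times (milliseconds) recorded with layers enabled.
    Both lists are assumed to come from the same scene and settings.
    """
    base = mean(baseline_ms)
    debug = mean(debug_ms)
    return (debug - base) / base * 100.0
```

For example, `overhead_percent([10.0, 10.0], [12.0, 13.0])` returns `25.0`, meaning the average frame took 25% longer with the layers on in that made-up run. Real overhead varies with the application, the API, and which checks are enabled.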

Use Cases and Scenarios

GPU debug layers find application in various scenarios and use cases throughout the development lifecycle. During initial development phases, these layers aid in identifying and resolving issues early, preventing potential bottlenecks. In testing and quality assurance, GPU debug layers provide crucial insights, ensuring that applications meet performance expectations across different hardware configurations.

In scenarios where applications undergo updates or modifications, GPU debug layers prove instrumental in maintaining stability. Whether addressing compatibility issues or optimizing performance for new features, having these layers enabled allows developers to iterate rapidly and deliver reliable graphics applications to end-users.

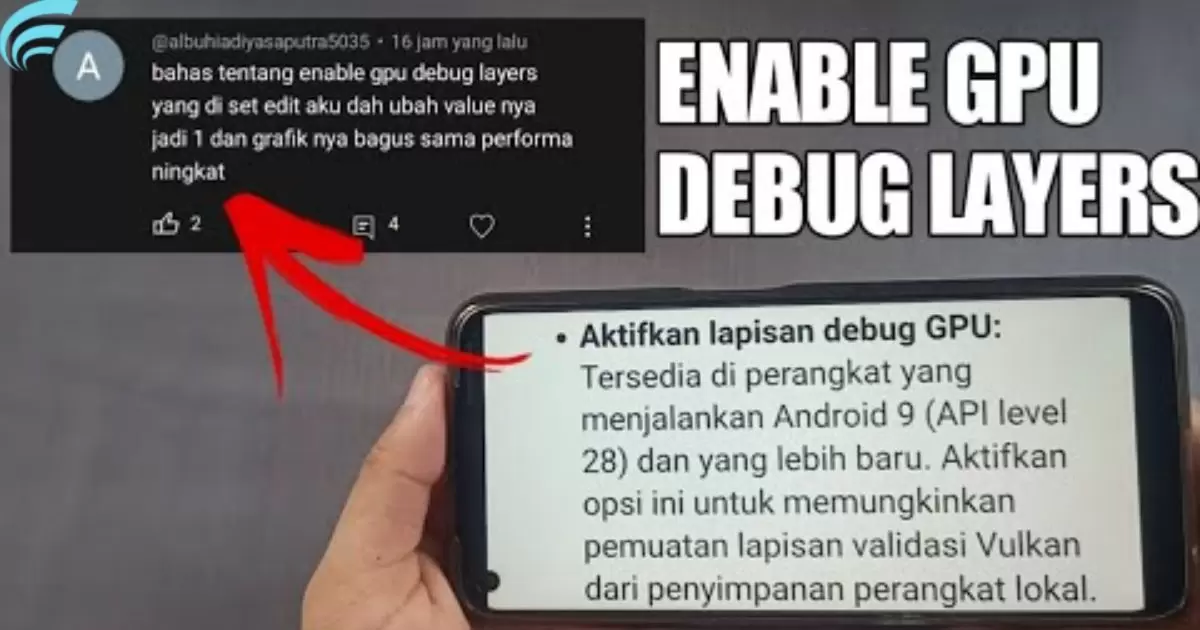

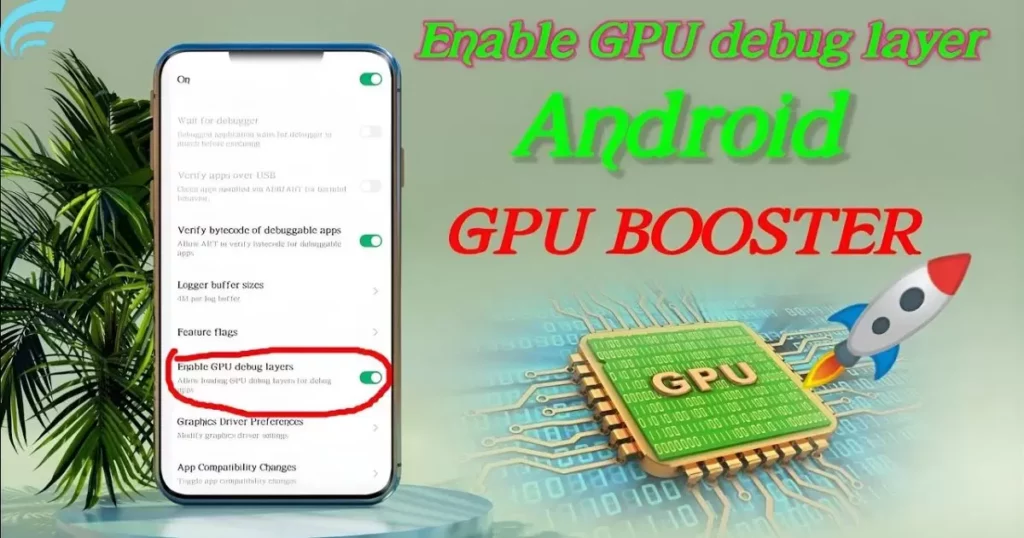

How to Enable GPU Debug Layers

Enabling GPU debug layers is usually a matter of configuration rather than a rewrite of the application. Depending on the graphics API, developers either request the layers when the application initializes the API, or switch them on from outside the application through an environment variable, a developer tool, or a platform setting. Many teams enable them in debug builds and leave them off in release builds. Utilizing development frameworks and APIs that support GPU debug layers simplifies the process.

It’s crucial to consult the documentation of the chosen graphics API and development environment for specific instructions. Once enabled, GPU debug layers actively monitor and provide real-time feedback during application execution, ensuring a comprehensive debugging experience.
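As one concrete illustration, Vulkan applications can be started with the Khronos validation layer switched on from outside the program. The sketch below assumes the Vulkan SDK (or the validation layer package) is installed and that the loader honors the `VK_INSTANCE_LAYERS` environment variable; newer loader versions also offer other variables, so treat this as one possible route and check the loader documentation. The helper names `env_with_validation` and `launch_with_validation` are invented for this example.

```python
import os
import subprocess

# Name of the Khronos validation layer shipped with the Vulkan SDK.
VALIDATION_LAYER = "VK_LAYER_KHRONOS_validation"


def env_with_validation(base_env=None):
    """Return a copy of the environment that requests the validation layer.

    Assumption: the Vulkan loader reads VK_INSTANCE_LAYERS as a list of
    layer names separated by the platform's path separator.
    """
    env = dict(os.environ if base_env is None else base_env)
    current = env.get("VK_INSTANCE_LAYERS", "")
    layers = [name for name in current.split(os.pathsep) if name]
    if VALIDATION_LAYER not in layers:
        layers.append(VALIDATION_LAYER)
    env["VK_INSTANCE_LAYERS"] = os.pathsep.join(layers)
    return env


def launch_with_validation(command):
    """Run a Vulkan application (any command list) with the layer requested."""
    return subprocess.run(command, env=env_with_validation(), check=False)
```

Calling `launch_with_validation(["./my_vulkan_app"])` (a placeholder command) starts the program with validation requested, and any messages the layer produces appear wherever the layer is configured to write them. Other APIs use different mechanisms, so the same idea will look different for Direct3D or on mobile platforms.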

Tips and Best Practices

Incorporating GPU debug layers effectively requires developers to follow tips and best practices to maximize their benefits. Regularly checking for updates and patches from GPU manufacturers or development framework providers ensures compatibility with the latest hardware and software configurations. Additionally, maintaining a balance between the depth of debugging and runtime performance is crucial for an optimal development experience.

Developers should leverage logging and profiling tools in conjunction with GPU debug layers to obtain a comprehensive view of application behavior. Establishing a systematic approach to debugging, including thorough testing across various scenarios, helps uncover and address potential issues systematically. By adhering to these tips and best practices, developers can harness the full potential of GPU debug layers while minimizing any associated challenges.
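One way to combine logging with debug layers, and to control how much detail you pay for, is to forward layer messages into your normal log and filter them by severity. The sketch below is a simplified model: the `LayerMessage` class, the three severity names, and the `forward_to_log` function are all hypothetical, and a real graphics API delivers messages through its own callback or message-queue mechanism.

```python
import logging
from dataclasses import dataclass

# Hypothetical severity names mapped onto standard logging levels.
LEVELS = {
    "info": logging.INFO,
    "warning": logging.WARNING,
    "error": logging.ERROR,
}


@dataclass
class LayerMessage:
    """Hypothetical shape of one message reported by a debug layer."""
    severity: str  # one of the keys in LEVELS
    text: str


def forward_to_log(messages, min_severity="warning", logger=None):
    """Log messages at or above min_severity and return the ones logged."""
    logger = logger or logging.getLogger("gpu.debug")
    threshold = LEVELS[min_severity]
    kept = [m for m in messages if LEVELS[m.severity] >= threshold]
    for message in kept:
        logger.log(LEVELS[message.severity], message.text)
    return kept
```

Raising `min_severity` to `"error"` keeps the log quiet during everyday work, while lowering it to `"info"` gives the most detail when you are chasing a specific problem.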

Real-World Impact

The real-world impact of enabling GPU debug layers is evident in the quality, performance, and reliability of graphics applications. Developers armed with the insights provided by these layers can address issues proactively, resulting in applications that deliver exceptional user experiences. The debugging process becomes more efficient, reducing development cycles and accelerating time-to-market for graphics software.

The impact extends beyond the development phase to end-users who benefit from applications free of rendering glitches, crashes, or performance bottlenecks. GPU debug layers contribute to the overall health of graphics applications, making them more resilient to varying hardware configurations and ensuring a consistent and enjoyable user experience.

The real-world impact underscores the significance of integrating GPU debug layers into the development workflow for robust and reliable graphics software.

FAQs

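Do GPU debug layers improve graphics performance?

Not directly. Enabling GPU debug layers adds checking work, so an application usually runs slower while they are on. The improvement comes afterwards: once developers fix the issues the layers report, the finished application delivers a smoother visual experience.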

Are there potential downsides to enabling GPU debug layers?

Yes. Enabling GPU debug layers adds overhead to graphics calls and uses extra system resources, so applications typically run slower while the layers are on. Compatibility issues with specific applications are also possible.

In what scenarios are GPU debug layers particularly beneficial?

GPU debug layers shine when an application shows graphics glitches, errors, or crashes during development or testing, providing valuable insights for troubleshooting and diagnostics.

How do I enable GPU debug layers on my system?

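It depends on your graphics API and platform. Some APIs let the application request the layers at startup, while others expose them through an environment variable, a developer tool, or a system setting. Check the documentation for your graphics API and development environment for the exact steps.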

Conclusion

In wrapping up the mystery of GPU debug layers, you might wonder: what happens if I enable GPU debug layers? It’s like granting developers superhero vision, so glitches are spotted quickly and can be fixed at the source. Enabling GPU debug layers during development is the tech-savvy way to make the final gaming or visual experience smoother and more stable. It’s not about speeding up your graphics card; it’s about catching mistakes before users ever see them.

So, if you’re building or troubleshooting a graphics application, don’t hesitate to activate GPU debug layers, read what they report, and turn them off again when you are done. It’s the secret sauce for shipping a graphics experience without the hiccups.